____ _ ____

/ __ \ (_)____ / __ \ __ __ ____

/ / / // // __ \ / / / // / / // __ \

/ /_/ // // /_/ // /_/ // /_/ // /_/ /

/_____//_// .___//_____/ \__,_// .___/

/_/ /_/

DipDup is a Python framework for building smart contract indexers. It helps developers focus on business logic instead of writing a boilerplate to store and serve data. DipDup-based indexers are selective, which means only required data is requested. This approach allows to achieve faster indexing times and decreased load on underlying APIs.

-

Ready to build your first indexer? Head to Quickstart.

-

Looking for examples? Check out Demo Projects and Built with DipDup pages.

-

Want to participate? Vote for open issues, join discussions or become a sponsor.

-

Have a question? Join our Discord or tag @dipdup_io on Twitter.

This project is maintained by the Baking Bad team.

Development is supported by Tezos Foundation.

Thanks

Sponsors

Decentralized web requires decentralized funding. The following people and organizations help the project to be sustainable.

Want your project to be listed here? We have nice perks for sponsors! Visit our GitHub Sponsors page.

Contributors

We are grateful to all the people who help us with the project.

- @852Kerfunkle

- @Anshit01

- @arrijabba

- @Fitblip

- @gdsoumya

- @herohthd

- @Karantezsure

- @mystdeim

- @nikos-kalomoiris

- @pravind

- @sbihel

- @tezosmiami

- @tomsib2001

- @TristanAllaire

- @veqtor

- @xflpt

If we forgot to mention you, or you want to update your record, please, open an issue or pull request.

Quickstart

This page will guide you through the steps to get your first selective indexer up and running in a few minutes without getting too deep into the details.

Let's create an indexer for the tzBTC FA1.2 token contract. Our goal is to save all token transfers to the database and then calculate some statistics of its holders' activity.

A modern Linux/macOS distribution with Python 3.10 installed is required to run DipDup.

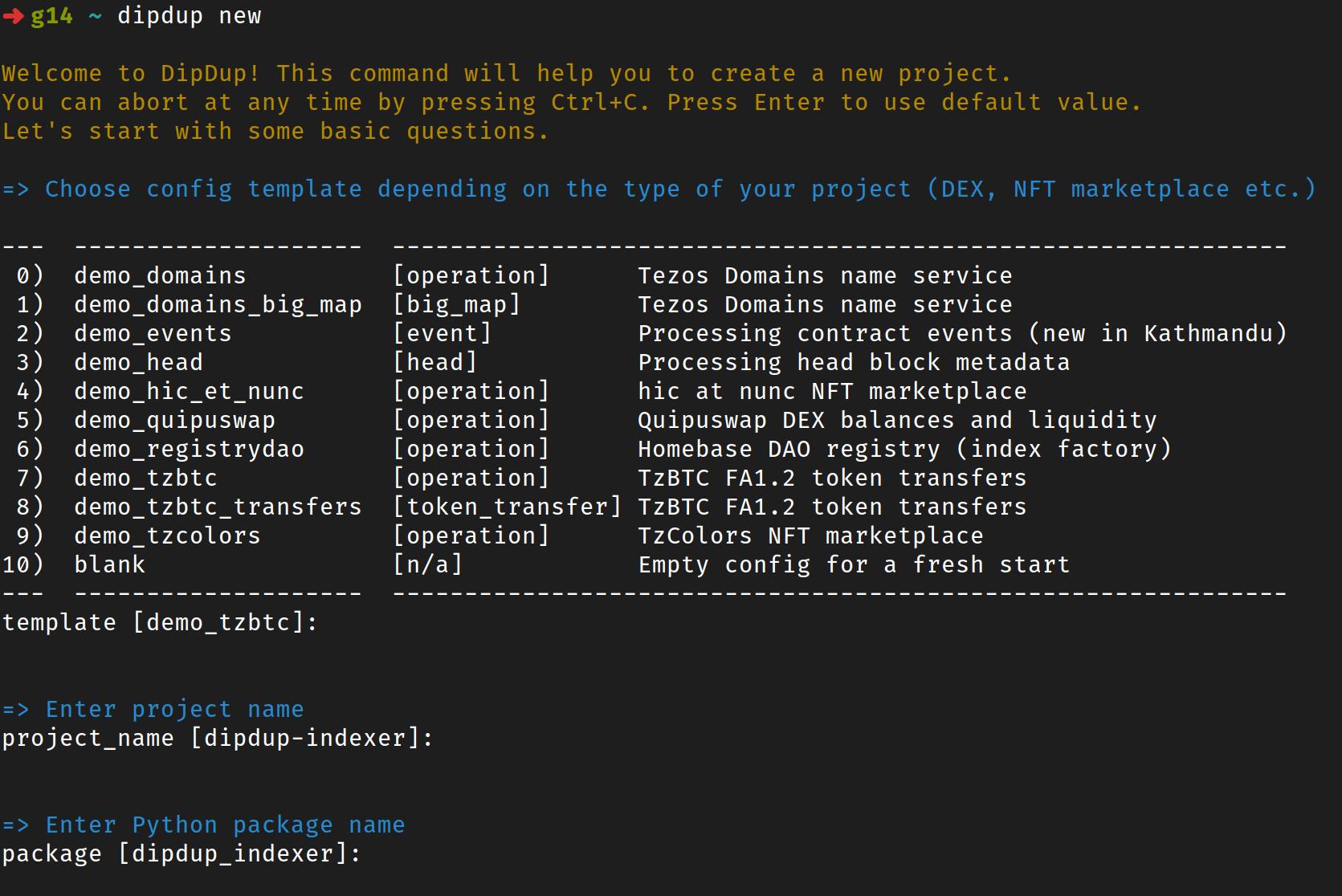

Create a new project

Interactively (recommended)

You can initialize a hello-world project interactively by choosing configuration options in the terminal. The following command will install DipDup for the current user:

curl -Lsf https://dipdup.io/install.py | python

Now, let's create a new project:

dipdup new

Follow the instructions; the project will be created in the current directory. You can skip reading the rest of this page and slap dipdup run instead.

From scratch

Currently, we mainly use Poetry for dependency management in DipDup. If you prefer hatch, pdb, piptools or others — use them instead. Below are some snippets to get you started.

# Create a new project directory

mkdir dipdup-indexer; cd dipdup-indexer

# Plain pip

python -m venv .venv

. .venv/bin/activate

pip install dipdup

# or Poetry

poetry init --python ">=3.10,<3.11"

poetry add dipdup

poetry shell

Write a configuration file

DipDup configuration is stored in YAML files of a specific format. Create a new file named dipdup.yml in your current working directory with the following content:

spec_version: 1.2

package: demo_token

database:

kind: sqlite

path: demo-token.sqlite3

contracts:

tzbtc_mainnet:

address: KT1PWx2mnDueood7fEmfbBDKx1D9BAnnXitn

typename: tzbtc

datasources:

tzkt_mainnet:

kind: tzkt

url: https://api.tzkt.io

indexes:

tzbtc_holders_mainnet:

template: tzbtc_holders

values:

contract: tzbtc_mainnet

datasource: tzkt_mainnet

templates:

tzbtc_holders:

kind: operation

datasource: <datasource>

contracts:

- <contract>

handlers:

- callback: on_transfer

pattern:

- destination: <contract>

entrypoint: transfer

- callback: on_mint

pattern:

- destination: <contract>

entrypoint: mint

Initialize project tree

Now it's time to generate typeclasses and callback stubs. Run the following command:

dipdup init

DipDup will create a Python package demo_token having the following structure:

demo_token

├── graphql

├── handlers

│ ├── __init__.py

│ ├── on_mint.py

│ └── on_transfer.py

├── hooks

│ ├── __init__.py

│ ├── on_reindex.py

│ ├── on_restart.py

│ ├── on_index_rollback.py

│ └── on_synchronized.py

├── __init__.py

├── models.py

├── sql

│ ├── on_reindex

│ ├── on_restart

│ ├── on_index_rollback

│ └── on_synchronized

└── types

├── __init__.py

└── tzbtc

├── __init__.py

├── parameter

│ ├── __init__.py

│ ├── mint.py

│ └── transfer.py

└── storage.py

That's a lot of files and directories! But don't worry, we will need only models.py and handlers modules in this guide.

Define data models

Our schema will consist of a single model Holder having several fields:

address— account addressbalance— in tzBTCvolume— total transfer/mint amount bypassedtx_count— number of transfers/mintslast_seen— time of the last transfer/mint

Put the following content in the models.py file:

from tortoise import fields

from dipdup.models import Model

class Holder(Model):

address = fields.CharField(max_length=36, pk=True)

balance = fields.DecimalField(decimal_places=8, max_digits=20, default=0)

turnover = fields.DecimalField(decimal_places=8, max_digits=20, default=0)

tx_count = fields.BigIntField(default=0)

last_seen = fields.DatetimeField(null=True)

Implement handlers

Everything's ready to implement an actual indexer logic.

Our task is to index all the balance updates, so we'll start with a helper method to handle them. Create a file named on_balance_update.py in the handlers package with the following content:

from datetime import datetime

from decimal import Decimal

import demo_token.models as models

async def on_balance_update(

address: str,

balance_update: Decimal,

timestamp: datetime,

) -> None:

holder, _ = await models.Holder.get_or_create(address=address)

holder.balance += balance_update

holder.turnover += abs(balance_update)

holder.tx_count += 1

holder.last_seen = timestamp

await holder.save()

Three methods of tzBTC contract can alter token balances — transfer, mint, and burn. The last one is omitted in this tutorial for simplicity. Edit corresponding handlers to call the on_balance_update method with data from matched operations:

on_transfer.py

from decimal import Decimal

from demo_token.handlers.on_balance_update import on_balance_update

from demo_token.types.tzbtc.parameter.transfer import TransferParameter

from demo_token.types.tzbtc.storage import TzbtcStorage

from dipdup.context import HandlerContext

from dipdup.models import Transaction

async def on_transfer(

ctx: HandlerContext,

transfer: Transaction[TransferParameter, TzbtcStorage],

) -> None:

if transfer.parameter.from_ == transfer.parameter.to:

# NOTE: Internal tzBTC transfer

return

amount = Decimal(transfer.parameter.value) / (10**8)

await on_balance_update(

address=transfer.parameter.from_,

balance_update=-amount,

timestamp=transfer.data.timestamp,

)

await on_balance_update(

address=transfer.parameter.to,

balance_update=amount,

timestamp=transfer.data.timestamp,

)

on_mint.py

from decimal import Decimal

from demo_token.handlers.on_balance_update import on_balance_update

from demo_token.types.tzbtc.parameter.mint import MintParameter

from demo_token.types.tzbtc.storage import TzbtcStorage

from dipdup.context import HandlerContext

from dipdup.models import Transaction

async def on_mint(

ctx: HandlerContext,

mint: Transaction[MintParameter, TzbtcStorage],

) -> None:

amount = Decimal(mint.parameter.value) / (10**8)

await on_balance_update(

address=mint.parameter.to,

balance_update=amount,

timestamp=mint.data.timestamp,

)

And that's all! We can run the indexer now.

Run your indexer

dipdup run

DipDup will fetch all the historical data and then switch to realtime updates. Your application data has been successfully indexed!

Getting started

This part of the docs covers the same features the Quickstart article does but is more focused on details.

Installation

This page covers the installation of DipDup in different environments.

Host requirements

A Linux/MacOS environment with Python 3.10 installed is required to use DipDup. Other UNIX-like systems should work but are not supported officially.

Minimum hardware requirements are 256 MB RAM, 1 CPU core, and some disk space for the database. RAM requirements increase with the number of indexes.

Non-UNIX environments

Windows is not officially supported, but there's a possibility everything will work fine. In case of issues throw us a message and use WSL or Docker.

We aim to improve cross-platform compatibility in future releases (issue).

Local installation

Interactively (recommended)

The following command will install DipDup for the current user:

curl -Lsf https://dipdup.io/install.py | python

This script uses pipx under the hood to install dipdup and datamodel-codegen as CLI tools. Then you can use any package manager of your choice to manage versions of DipDup and other project dependencies.

Manually

Currently, we mainly use Poetry for dependency management in DipDup. If you prefer hatch, pdb, piptools or others — use them instead. Below are some snippets to get you started.

# Create a new project directory

mkdir dipdup-indexer; cd dipdup-indexer

# Plain pip

python -m venv .venv

. .venv/bin/activate

pip install dipdup

# or Poetry

poetry init --python ">=3.10,<3.11"

poetry add dipdup

poetry shell

Docker

See 6.2. Running in Docker page.

Core concepts

Big picture

Initially, DipDup was heavily inspired by The Graph Protocol, but there are several differences. The most important one is that DipDup indexers are completely off-chain.

DipDup utilizes a microservice approach and relies heavily on existing solutions, making the SDK very lightweight and allowing developers to switch API engines on demand.

DipDup works with operation groups (explicit operation and all internal ones, a single contract call) and Big_map updates (lazy hash map structures, read more) — until fully-fledged protocol-level events are not implemented in Tezos.

Consider DipDup a set of best practices for building custom backends for decentralized applications, plus a toolkit that spares you from writing boilerplate code.

DipDup is tightly coupled with TzKT API but can generally use any data provider which implements a particular feature set. TzKT provides REST endpoints and Websocket subscriptions with flexible filters enabling selective indexing and returns "humanified" contract data, which means you don't have to handle raw Michelson expressions.

DipDup offers PostgreSQL + Hasura GraphQL Engine combo out-of-the-box to expose indexed data via REST and GraphQL with minimal configuration. However, you can use any database and API engine (e.g., write API backend in-house).

How it works

From the developer's perspective, there are three main steps for creating an indexer using the DipDup framework:

- Write a declarative configuration file containing all the inventory and indexing rules.

- Describe your domain-specific data models.

- Implement the business logic, which is how to convert blockchain data to your models.

As a result, you get a service responsible for filling the database with the indexed data.

Within this service, there can be multiple indexers running independently.

Atomicity and persistency

DipDup applies all updates atomically block by block. In case of an emergency shutdown, it can safely recover later and continue from the level it ended. DipDup state is stored in the database per index and can be used by API consumers to determine the current indexer head.

Here are a few essential things to know before running your indexer:

- Ensure that the database (or schema in the case of PostgreSQL) you're connecting to is used by DipDup exclusively. Changes in index configuration or models require DipDup to drop the whole database (schema) and start indexing from scratch. You can, however, mark specific tables as immune to preserve them from being dropped.

- Changing index config triggers reindexing. Also, do not change aliases of existing indexes in the config file without cleaning up the database first. DipDup won't handle that automatically and will treat the renamed index as new.

- Multiple indexes pointing to different contracts should not reuse the same models (unless you know what you are doing) because synchronization is done sequentially by index.

Schema migration

DipDup does not support database schema migration: if there's any model change, it will trigger reindexing. The rationale is that it's easier and faster to start over than handle migrations that can be of arbitrary complexity and do not guarantee data consistency.

DipDup stores a hash of the SQL version of the DB schema and checks for changes each time you run indexing.

Handling chain reorgs

Reorg messages signaling chain reorganizations. That means some blocks, including all operations, are rolled back in favor of another with higher fitness. Chain reorgs happen regularly (especially in testnets), so it's not something you can ignore. These messages must be handled correctly -- otherwise, you will likely accumulate duplicate or invalid data.

Singe version 6.0 DipDup processes chain reorgs seamlessly restoring a previous database state. You can implement your rollback logic by editing the on_index_rollback event hook.

Creating config

Developing a DipDup indexer begins with creating a YAML config file. You can find a minimal example to start indexing on the Quickstart page.

General structure

DipDup configuration is stored in YAML files of a specific format. By default, DipDup searches for dipdup.yml file in the current working directory, but you can provide any path with a -c CLI option.

DipDup config file consists of several logical blocks:

| Header | spec_version* | 14.15. spec_version |

package* | 14.12. package | |

| Inventory | database | 14.5. database |

contracts | 14.3. contracts | |

datasources | 14.6. datasources | |

custom | 14.4. custom | |

| Index definitions | indexes | 14.9. indexes |

templates | 14.16. templates | |

| Hook definitions | hooks | 14.8. hooks |

jobs | 14.10. jobs | |

| Integrations | hasura | 14.7. hasura |

sentry | 14.14. sentry | |

prometheus | 14.13. prometheus | |

| Tunables | advanced | 14.2. advanced |

logging | 14.11. logging |

Header contains two required fields, package and spec_version. They are used to identify the project and the version of the DipDup specification. All other fields in the config are optional.

Inventory specifies contracts that need to be indexed, datasources to fetch data from, and the database to store data in.

Index definitions define the index templates that will be used to index the contract data.

Hook definitions define callback functions that will be called manually or on schedule.

Integrations are used to integrate with third-party services.

Tunables affect the behavior of the whole framework.

Merging config files

DipDup allows you to customize the configuration for a specific environment or workflow. It works similarly to docker-compose anchors but only for top-level sections. If you want to override a nested property, you need to recreate a whole top-level section. To merge several DipDup config files, provide the -c command-line option multiple times:

dipdup -c dipdup.yml -c dipdup.prod.yml run

Run config export command if unsure about the final config used by DipDup.

Full example

This page or paragraph is yet to be written. Come back later.

Let's put it all together. The config below is an artificial example but contains almost all available options.

spec_version: 1.2

package: my_indexer

database:

kind: postgres

host: db

port: 5432

user: dipdup

password: changeme

database: dipdup

schema_name: public

immune_tables:

- token_metadata

- contract_metadata

contracts:

some_dex:

address: KT1K4EwTpbvYN9agJdjpyJm4ZZdhpUNKB3F6

typename: quipu_fa12

datasources:

tzkt_mainnet:

kind: tzkt

url: https://api.tzkt.io

my_api:

kind: http

url: https://my_api.local/v1

ipfs:

kind: ipfs

url: https://ipfs.io/ipfs

coinbase:

kind: coinbase

metadata:

kind: metadata

url: https://metadata.dipdup.net

network: mainnet

indexes:

operation_index_from_template:

template: operation_template

values:

datasource: tzkt

contract: some_dex

big_map_index_from_template:

template: big_map_template

values:

datasource: tzkt

contract: some_dex

first_level: 1

last_level: 46963

skip_history: never

factory:

kind: operation

datasource: tzkt

types:

- origination

contracts:

- some_dex

handlers:

- callback: on_factory_origination

pattern:

- type: origination

similar_to: some_dex

templates:

operation_template:

kind: operation

datasource: <datasource>

types:

- origination

- transaction

contracts:

- <contract>

handlers:

- callback: on_origination

pattern:

- type: origination

originated_contract: <contract>

- callback: on_some_call

pattern:

- type: transaction

destination: <contract>

entrypoint: some_call

big_map_template:

kind: big_map

datasource: <datasource>

handlers:

- callback: on_update_records

contract: <name_registry>

path: store.records

- callback: on_update_expiry_map

contract: <name_registry>

path: store.expiry_map

hooks:

calculate_stats:

callback: calculate_stats

atomic: False

args:

major: bool

jobs:

midnight_stats:

hook: calculate_stats

crontab: "0 0 * * *"

args:

major: True

sentry:

dsn: https://localhost

environment: dev

debug: False

prometheus:

host: 0.0.0.0

hasura:

url: http://hasura:8080

admin_secret: changeme

allow_aggregations: False

camel_case: true

select_limit: 100

advanced:

early_realtime: True

merge_subscriptions: False

postpone_jobs: False

metadata_interface: False

skip_version_check: False

scheduler:

apscheduler.job_defaults.coalesce: True

apscheduler.job_defaults.max_instances: 3

reindex:

manual: wipe

migration: exception

rollback: ignore

config_modified: exception

schema_modified: exception

rollback_depth: 2

crash_reporting: False

logging: verbose

Project structure

The structure of the DipDup project package is the following:

demo_token

├── graphql

├── handlers

│ ├── __init__.py

│ ├── on_mint.py

│ └── on_transfer.py

├── hasura

├── hooks

│ ├── __init__.py

│ ├── on_reindex.py

│ ├── on_restart.py

│ ├── on_index_rollback.py

│ └── on_synchronized.py

├── __init__.py

├── models.py

├── sql

│ ├── on_reindex

│ ├── on_restart

│ ├── on_index_rollback

│ └── on_synchronized

└── types

├── __init__.py

└── tzbtc

├── __init__.py

├── parameter

│ ├── __init__.py

│ ├── mint.py

│ └── transfer.py

└── storage.py

| path | description |

|---|---|

graphql | GraphQL queries for Hasura (*.graphql) |

handlers | User-defined callbacks to process matched operations and big map diffs |

hasura | Arbitrary Hasura metadata (*.json) |

hooks | User-defined callbacks to run manually or by schedule |

models.py | Tortoise ORM models |

sql | SQL scripts to run from callbacks (*.sql) |

types | Codegened Pydantic typeclasses for contract storage/parameter |

DipDup will generate all the necessary directories and files inside the project's root on init command. These include contract type definitions and callback stubs to be implemented by the developer.

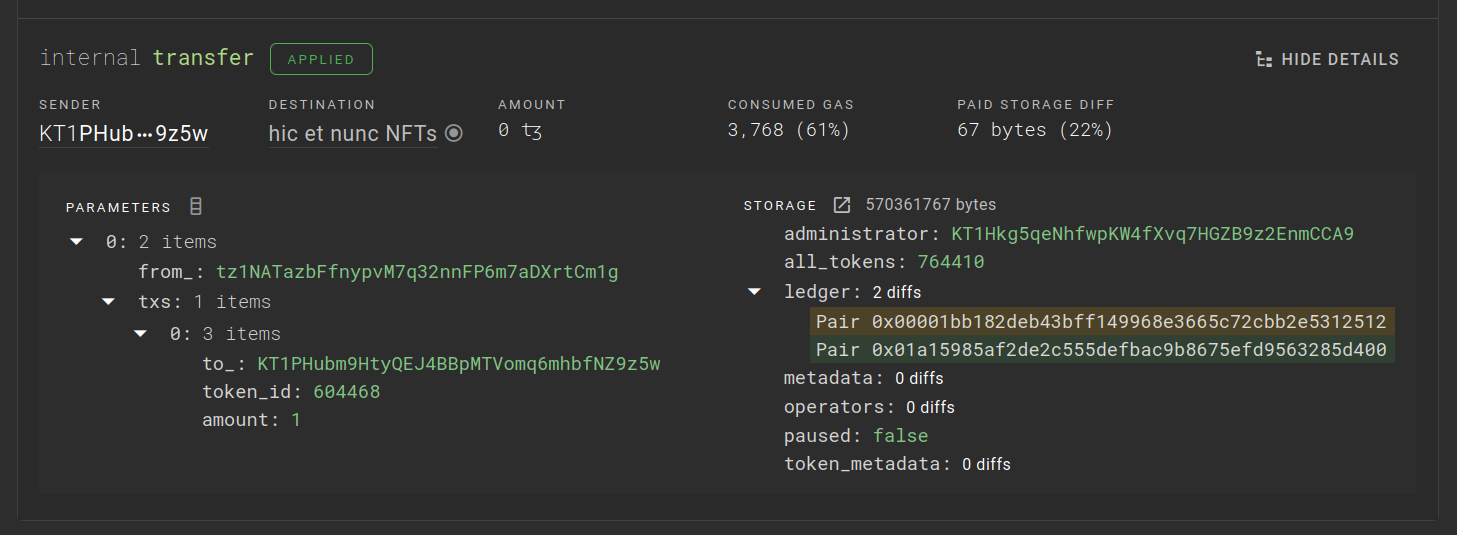

Type classes

DipDup receives all smart contract data (transaction parameters, resulting storage, big_map updates) in normalized form (read more about how TzKT handles Michelson expressions) but still as raw JSON. DipDup uses contract type information to generate data classes, which allow developers to work with strictly typed data.

DipDup generates Pydantic models out of JSONSchema. You might want to install additional plugins (PyCharm, mypy) for convenient work with this library.

The following models are created at init for different indexes:

operation: storage type for all contracts in handler patterns plus parameter type for all destination+entrypoint pairs.big_map: key and storage types for all used contracts and big map paths.event: payload types for all used contracts and tags.

Other index kinds do not use code generated types.

Nested packages

Callback modules don't have to be in top-level hooks/handlers directories. Add one or multiple dots to the callback name to define nested packages:

package: indexer

hooks:

foo.bar:

callback: foo.bar

After running the init command, you'll get the following directory tree (shortened for readability):

indexer

├── hooks

│ ├── foo

│ │ ├── bar.py

│ │ └── __init__.py

│ └── __init__.py

└── sql

└── foo

└── bar

└── .keep

The same rules apply to handler callbacks. Note that the callback field must be a valid Python package name - lowercase letters, underscores, and dots.

Defining models

DipDup uses the Tortoise ORM library to cover database operations. During initialization, DipDup generates a models.py file on the top level of the package that will contain all database models. The name and location of this file cannot be changed.

A typical models.py file looks like the following (example from demo_domains package):

from typing import Optional

from tortoise import fields

from tortoise.fields.relational import ForeignKeyFieldInstance

from dipdup.models import Model

class TLD(Model):

id = fields.CharField(max_length=255, pk=True)

owner = fields.CharField(max_length=36)

class Domain(Model):

id = fields.CharField(max_length=255, pk=True)

tld: ForeignKeyFieldInstance[TLD] = fields.ForeignKeyField('models.TLD', 'domains')

expiry = fields.DatetimeField(null=True)

owner = fields.CharField(max_length=36)

token_id = fields.BigIntField(null=True)

tld_id: Optional[str]

class Record(Model):

id = fields.CharField(max_length=255, pk=True)

domain: ForeignKeyFieldInstance[Domain] = fields.ForeignKeyField('models.Domain', 'records')

address = fields.CharField(max_length=36, null=True)

See the links below to learn how to use this library.

Limitations

Some limitations are applied to model names and fields to avoid ambiguity in GraphQL API.

- Table names must be in

snake_case - Model fields must be in

snake_case - Model fields must differ from table name

Implementing handlers

DipDup generates a separate file with a callback stub for each handler in every index specified in the configuration file.

In the case of the transaction handler, the callback method signature is the following:

from <package>.types.<typename>.parameter.entrypoint_foo import EntryPointFooParameter

from <package>.types.<typename>.parameter.entrypoint_bar import EntryPointBarParameter

from <package>.types.<typename>.storage import TypeNameStorage

async def on_transaction(

ctx: HandlerContext,

entrypoint_foo: Transaction[EntryPointFooParameter, TypeNameStorage],

entrypoint_bar: Transaction[EntryPointBarParameter, TypeNameStorage]

) -> None:

...

where:

entrypoint_foo ... entrypoint_barare items from the according to handler pattern.ctx: HandlerContextprovides useful helpers and contains an internal state (see ).- A

Transactionmodel contains transaction typed parameter and storage, plus other fields.

For the origination case, the handler signature will look similar:

from <package>.types.<typename>.storage import TypeNameStorage

async def on_origination(

ctx: HandlerContext,

origination: Origination[TypeNameStorage],

)

An Origination model contains the origination script, initial storage (typed), amount, delegate, etc.

A Big_map update handler will look like the following:

from <package>.types.<typename>.big_map.<path>_key import PathKey

from <package>.types.<typename>.big_map.<path>_value import PathValue

async def on_update(

ctx: HandlerContext,

update: BigMapDiff[PathKey, PathValue],

)

BigMapDiff contains action (allocate, update, or remove), nullable key and value (typed).

Naming conventions

Python language requires all module and function names in snake case and all class names in pascal case.

A typical imports section of big_map handler callback looks like this:

from <package>.types.<typename>.storage import TypeNameStorage

from <package>.types.<typename>.parameter.<entrypoint> import EntryPointParameter

from <package>.types.<typename>.big_map.<path>_key import PathKey

from <package>.types.<typename>.big_map.<path>_value import PathValue

Here typename is defined in the contract inventory, entrypoint is specified in the handler pattern, and path is in the handler config.

Handling name collisions

Indexing operations of multiple contracts with the same entrypoints can lead to name collisions during code generation. In this case DipDup raises a ConfigurationError and suggests to set alias for each conflicting handler. That applies to operation indexes only. Consider the following index definition, some kind of "chain minting" contract:

kind: operation

handlers:

- callback: on_mint

pattern:

- type: transaction

entrypoint: mint

alias: foo_mint

- type: transaction

entrypoint: mint

alias: bar_mint

The following code will be generated for on_mint callback:

from example.types.foo.parameter.mint import MintParameter as FooMintParameter

from example.types.foo.storage import FooStorage

from example.types.bar.parameter.mint import MintParameter as BarMintParameter

from example.types.bar.storage import BarStorage

async def on_transaction(

ctx: HandlerContext,

foo_mint: Transaction[FooMintParameter, FooStorage],

bar_mint: Transaction[BarMintParameter, BarStorage]

) -> None:

...

You can safely change argument names if you want to.

Templates and variables

Environment variables

DipDup supports compose-style variable expansion with optional default value:

database:

kind: postgres

host: ${POSTGRES_HOST:-localhost}

password: ${POSTGRES_PASSWORD}

You can use environment variables anywhere throughout the configuration file. Consider the following example (absolutely useless but illustrative):

custom:

${FOO}: ${BAR:-bar}

${FIZZ:-fizz}: ${BUZZ}

Running FOO=foo BUZZ=buzz dipdup config export --unsafe will produce the following output:

custom:

fizz: buzz

foo: bar

Use this feature to store sensitive data outside of the configuration file and make your app fully declarative.

Index templates

Templates allow you to reuse index configuration, e.g., for different networks (mainnet/ghostnet) or multiple contracts sharing the same codebase.

templates:

my_template:

kind: operation

datasource: <datasource>

contracts:

- <contract>

handlers:

- callback: callback

pattern:

- destination: <contract>

entrypoint: call

Templates have the same syntax as indexes of all kinds; the only difference is that they additionally support placeholders enabling parameterization:

field: <placeholder>

The template above can be resolved in the following way:

contracts:

some_dex: ...

datasources:

tzkt: ...

indexes:

my_template_instance:

template: my_template

values:

datasource: tzkt_mainnet

contract: some_dex

Any string value wrapped in angle brackets is treated as a placeholder, so make sure there are no collisions with the actual values. You can use a single placeholder multiple times. In contradiction to environment variables, dictionary keys cannot be placeholders.

An index created from a template must have a value for each placeholder; the exception is raised otherwise. These values are available in the handler context as ctx.template_values dictionary.

You can also spawn indexes from templates in runtime. To achieve the same effect as above, you can use the following code:

ctx.add_index(

name='my_template_instance',

template='my_template',

values={

'datasource': 'tzkt_mainnet',

'contract': 'some_dex',

},

)

Indexes

Index — is a primary DipDup entity connecting the inventory and specifying data handling rules.

Each index has a linked TzKT datasource and a set of handlers. Indexes can join multiple contracts considered as a single application. Also, contracts can be used by multiple indexes of any kind, but make sure that data don't overlap. See 2.2. Core concepts → atomicity-and-persistency.

indexes:

contract_operations:

kind: operation

datasource: tzkt_mainnet

handlers:

- callback: on_operation

pattern: ...

Multiple indexes are available for different kinds of blockchain data. Currently, the following options are available:

big_mapeventheadoperationtoken_transfer

Every index is linked to specific datasource from 14.6. datasources config section.

Using templates

Index definitions can be templated to reduce the amount of boilerplate code. To create an index from the template during startup, add an item with the template and values field to the indexes section:

templates:

operation_index_template:

kind: operation

datasource: <datasource>

...

indexes:

template_instance:

template: operation_index_template

values:

datasource: tzkt_mainnet

You can also create indexes from templates later in runtime. See 2.7. Templates and variables page.

Indexing scope

One can optionally specify block levels DipDup has to start and stop indexing at, e.g., there's a new version of the contract, and there's no need to track the old one anymore.

indexes:

my_index:

first_level: 1000000

last_level: 2000000

big_map index

Big maps are lazy structures allowing to access and update only exact keys. Gas costs for these operations doesn't depend on the size of a big map, but you can't iterate over it's keys onchain.

big_map index allows querying only updates of specific big maps. In some cases, it can drastically reduce the amount of data transferred and thus indexing speed compared to fetching all operations.

indexes:

token_big_map_index:

kind: big_map

datasource: tzkt

skip_history: never

handlers:

- callback: on_ledger_update

contract: token

path: data.ledger

- callback: on_token_metadata_update

contract: token

path: token_metadata

Handlers

Each big map handler contains three required fields:

callback— a name of async function with a particular signature; DipDup will search for it in<package>.handlers.<callback>module.contract— big map parent contractpath— path to the big map in the contract storage (use dot as a delimiter)

Index only the current state

When the skip_history field is set to once, DipDup will skip historical changes only on initial sync and switch to regular indexing afterward. When the value is always, DipDup will fetch all big map keys on every restart. Preferrable mode depends on your workload.

All big map diffs DipDup passes to handlers during fast sync have the action field set to BigMapAction.ADD_KEY. Remember that DipDup fetches all keys in this mode, including ones removed from the big map. You can filter them out later by BigMapDiff.data.active field if needed.

event index

Kathmandu Tezos protocol upgrade has introduced contract events, a new way to interact with smart contracts. This index allows indexing events using strictly typed payloads. From the developer's perspective, it's similar to the big_map index with a few differences.

An example below is artificial since no known contracts in mainnet are currently using events.

contract: events_contract

tag: move

- callback: on_roll_event

contract: events_contract

tag: roll

- callback: on_other_event

contract: events_contract

Unlike big maps, contracts may introduce new event tags and payloads at any time, so the index must be updated accordingly.

async def on_move_event(

ctx: HandlerContext,

event: Event[MovePayload],

) -> None:

...

Each contract can have a fallback handler called for all unknown events so you can process untyped data.

async def on_other_event(

ctx: HandlerContext,

event: UnknownEvent,

) -> None:

...

head index

This very simple index provides metadata of the latest block when it's baked. Only realtime data is processed; the synchronization stage is skipped for this index.

spec_version: 1.2

package: demo_head

database:

kind: sqlite

path: demo-head.sqlite3

datasources:

tzkt_mainnet:

kind: tzkt

url: ${TZKT_URL:-https://api.tzkt.io}

indexes:

mainnet_head:

kind: head

datasource: tzkt_mainnet

handlers:

- callback: on_mainnet_head

Head index callback receives HeadBlockData model that contains only basic info; no operations are included. Being useless by itself, this index is helpful for monitoring and cron-like tasks. You can define multiple indexes for each datasource used.

Subscription to the head channel is enabled by default, even if no head indexes are defined. Each time the block is baked, the dipdup_head table is updated per datasource. Use it to ensure that both index datasource and underlying blockchain are up and running.

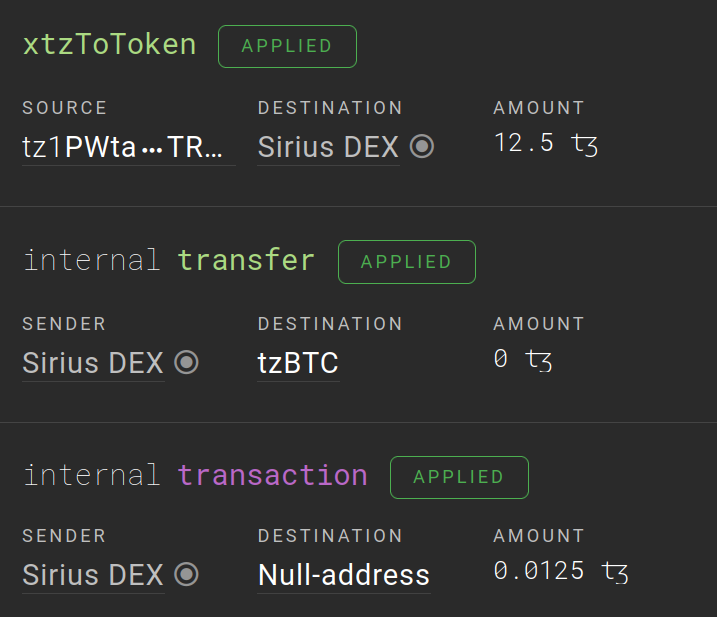

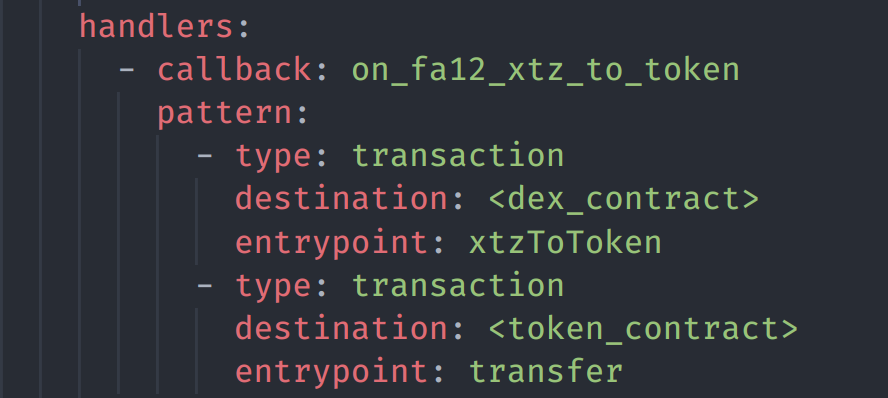

operation index

Operation index allows you to query only operations related to your dapp and match them with handlers by content. A single contract call consists of implicit operation and, optionally, internal operations. For each of them, you can specify a handler that will be called when the operation group matches. As a result, you get something like an event log for your dapp.

Handlers

Each operation handler contains two required fields:

callback— a name of async function with a particular signature; DipDup will search for it in<package>.handlers.<callback>module.pattern— a non-empty list of items that need to be matched.

indexes:

my_index:

kind: operation

datasource: tzkt

contracts:

- some_contract

handlers:

- callback: on_call

pattern:

- destination: some_contract

entrypoint: transfer

You can think of the operation pattern as a regular expression on a sequence of operations (both external and internal) with a global flag enabled (there can be multiple matches). Multiple operation parameters can be used for matching (source, destination, etc.).

You will get slightly different callback argument types depending on whether pattern item is typed or not. If so, DipDup will generate the dataclass for a particular entrypoint/storage, otherwise you will have to handle untyped parameters/storage updates stored in OperationData model.

Matching originations

| name | description | supported | typed |

|---|---|---|---|

originated_contract.address | Origination of exact contract. | ✅ | ✅ |

originated_contract.code_hash | Originations of all contracts having the same code. | ✅ | ✅ |

source.address | Special cases only. This filter is very slow and doesn't support strict typing. Usually, originated_contract.code_hash suits better. | ⚠ | ❌ |

source.code_hash | Currently not supported. | ❌ | ❌ |

similar_to.address | Compatibility alias to originated_contract.code_hash. Can be removed some day. | ➡️ | ➡️ |

similar_to.code_hash | Compatibility alias to originated_contract.code_hash. Can be removed some day. | ➡️ | ➡️ |

Matching transactions

| name | description | supported | typed |

|---|---|---|---|

source.address | Sent by exact address. | ✅ | N/A |

source.code_hash | Sent by any contract having this code hash | ✅ | N/A |

destination.address | Invoked contract address | ✅ | ✅ |

destination.code_hash | Invoked contract code hash | ✅ | ✅ |

destination.entrypoint | Entrypoint called | ✅ | ✅ |

Optional items

Pattern items have optional field to continue matching even if this item is not found. It's usually unnecessary to match the entire operation content; you can skip external/internal calls that are not relevant. However, there is a limitation: optional items cannot be followed by operations ignored by the pattern.

pattern:

# Implicit transaction

- destination: some_contract

entrypoint: mint

# Internal transactions below

- destination: another_contract

entrypoint: transfer

- source: some_contract

type: transaction

Specifying contracts to index

DipDup will try to guess the list of used contracts by handlers' signatures. If you want to specify it explicitly, use contracts field:

indexes:

my_index:

kind: operation

datasource: tzkt

contracts:

- foo

- bar

Specifying operation types

By default, DipDup processes only transactions, but you can enable other operation types you want to process (currently, transaction, origination, and migration are supported).

indexes:

my_index:

kind: operation

datasource: tzkt

types:

- transaction

- origination

- migration

token_transfer index

This index allows indexing token transfers of contracts compatible with FA1.2 or FA2 standards.

spec_version: 1.2

package: demo_token_transfers

database:

kind: sqlite

path: demo-token-transfers.sqlite3

contracts:

tzbtc_mainnet:

address: KT1PWx2mnDueood7fEmfbBDKx1D9BAnnXitn

typename: tzbtc

datasources:

tzkt:

kind: tzkt

url: https://api.tzkt.io

indexes:

tzbtc_holders_mainnet:

kind: token_transfer

datasource: tzkt

handlers:

- callback: on_token_transfer

contract: tzbtc_mainnet

Callback receives TokenTransferData model that optionally contains the transfer sender, receiver, amount, and token metadata.

from decimal import Decimal

from decimal import InvalidOperation

from demo_token_transfers.handlers.on_balance_update import on_balance_update

from dipdup.context import HandlerContext

from dipdup.models import TokenTransferData

async def on_token_transfer(

ctx: HandlerContext,

token_transfer: TokenTransferData,

) -> None:

from_, to = token_transfer.from_address, token_transfer.to_address

if not from_ or not to or from_ == to:

return

try:

amount = Decimal(token_transfer.amount or 0) / (10**8)

except InvalidOperation:

return

if not amount:

return

await on_balance_update(address=from_, balance_update=-amount, timestamp=token_transfer.timestamp)

await on_balance_update(address=to, balance_update=amount, timestamp=token_transfer.timestamp)

GraphQL API

In this section, we assume you use Hasura GraphQL Engine integration to power your API.

Before starting to do client integration, it's good to know the specifics of Hasura GraphQL protocol implementation and the general state of the GQL ecosystem.

Queries

By default, Hasura generates three types of queries for each table in your schema:

- Generic query enabling filters by all columns

- Single item query (by primary key)

- Aggregation query (can be disabled in config)

All the GQL features such as fragments, variables, aliases, directives are supported, as well as batching.

Read more in Hasura docs.

It's important to understand that a GraphQL query is just a POST request with JSON payload, and in some instances, you don't need a complicated library to talk to your backend.

Pagination

By default, Hasura does not restrict the number of rows returned per request, which could lead to abuses and a heavy load on your server. You can set up limits in the configuration file. See 14.7. hasura → limit-number-of-rows. But then, you will face the need to paginate over the items if the response does not fit the limits.

Subscriptions

From Hasura documentation:

Hasura GraphQL engine subscriptions are live queries, i.e., a subscription will return the latest result of the query and not necessarily all the individual events leading up to it.

This feature is essential to avoid complex state management (merging query results and subscription feed). In most scenarios, live queries are what you need to sync the latest changes from the backend.

If the live query has a significant response size that does not fit into the limits, you need one of the following:

- Paginate with offset (which is not convenient)

- Use cursor-based pagination (e.g., by an increasing unique id).

- Narrow down request scope with filtering (e.g., by timestamp or level).

Ultimately you can get "subscriptions" on top of live quires by requesting all the items having ID greater than the maximum existing or all the items with a timestamp greater than now.

Websocket transport

Hasura is compatible with subscriptions-transport-ws library, which is currently deprecated but still used by most clients.

Mutations

The purpose of DipDup is to create indexers, which means you can consistently reproduce the state as long as data sources are accessible. It makes your backend "stateless", meaning tolerant to data loss.

However, you might need to introduce a non-recoverable state and mix indexed and user-generated content in some cases. DipDup allows marking these UGC tables "immune", protecting them from being wiped. In addition to that, you will need to set up Hasura Auth and adjust write permissions for the tables (by default, they are read-only).

Lastly, you will need to execute GQL mutations to modify the state from the client side. Read more about how to do that with Hasura.

Hasura integration

DipDup uses this optional section to configure the Hasura engine to track your tables automatically.

hasura:

url: http://hasura:8080

admin_secret: ${HASURA_ADMIN_SECRET:-changeme}

If you have enabled this integration, DipDup will generate Hasura metadata based on your DB schema and apply it using Metadata API.

Hasura metadata is all about data representation in GraphQL API. The structure of the database itself is managed solely by Tortoise ORM.

Metadata configuration is idempotent: each time you call run or hasura configure command, DipDup queries the existing schema and updates metadata if required. DipDup configures Hasura after reindexing, saves the hash of resulting metadata in the dipdup_schema table, and doesn't touch Hasura until needed.

Database limitations

The current version of Hasura GraphQL Engine treats public and other schemas differently. Table schema.customer becomes schema_customer root field (or schemaCustomer if camel_case option is enabled in DipDup config). Table public.customer becomes customer field, without schema prefix. There's no way to remove this prefix for now. You can track related issue on Hasura's GitHub to know when the situation will change. Starting with 3.0.0-rc1, DipDup enforces public schema name to avoid ambiguity and issues with the GenQL library. You can still use any schema name if Hasura integration is not enabled.

Unauthorized access

DipDup creates user role that allows querying /graphql endpoint without authorization. All tables are set to read-only for this role.

You can limit the maximum number of rows such queries return and also disable aggregation queries automatically generated by Hasura:

hasura:

select_limit: 100

allow_aggregations: False

Note that with limits enabled, you have to use either offset or cursor-based pagination on the client-side.

Convert field names to camel case

For those of you from the JavaScript world, it may be more familiar to use camelCase for variable names instead of snake_case Hasura uses by default. DipDup now allows to convert all fields in metadata to this casing:

hasura:

camel_case: true

Now this example query to hic et nunc demo indexer...

query MyQuery {

hic_et_nunc_token(limit: 1) {

id

creator_id

}

}

...will become this one:

query MyQuery {

hicEtNuncToken(limit: 1) {

id

creatorId

}

}

All fields auto-generated by Hasura will be renamed accordingly: hic_et_nunc_token_by_pk to hicEtNuncTokenByPk, delete_hic_et_nunc_token to deleteHicEtNuncToken and so on. To return to defaults, set camel_case to False and run hasura configure --force.

Remember that "camelcasing" is a separate stage performed after all tables are registered. So during configuration, you can observe fields in snake_case for several seconds even if conversion to camel case is enabled.

Custom Hasura Metadata

There are some cases where you want to apply custom modifications to the Hasura metadata. For example, assume that your database schema has a view that contains data from the main table, in which case you cannot set a foreign key between them. Then you can place files with a .json extension in the hasura directory of your project with the content in Hasura query format, and DipDup will execute them in alphabetical order of file names when the indexing is complete.

The format of the queries can be found in the Metadata API documentation.

Feature flag allow_inconsistent_metadata set in hasura configuration section allows users to modify the behavior of the requests error handling. By default, this value is False.

REST endpoints

Hasura 2.0 introduced the ability to expose arbitrary GraphQL queries as REST endpoints. By default, DipDup will generate GET and POST endpoints to fetch rows by primary key for all tables:

curl http://127.0.0.1:8080/api/rest/hicEtNuncHolder?address=tz1UBZUkXpKGhYsP5KtzDNqLLchwF4uHrGjw

{

"hicEtNuncHolderByPk": {

"address": "tz1UBZUkXpKGhYsP5KtzDNqLLchwF4uHrGjw"

}

}

However, there's a limitation dictated by how Hasura parses HTTP requests: only models with primary keys of basic types (int, string, and so on) can be fetched with GET requests. An attempt to fetch model with BIGINT primary key will lead to the error: Expected bigint for variable id got Number.

A workaround to fetching any model is to send a POST request containing a JSON payload with a single key:

curl -d '{"id": 152}' http://127.0.0.1:8080/api/rest/hicEtNuncToken

{

"hicEtNuncTokenByPk": {

"creatorId": "tz1UBZUkXpKGhYsP5KtzDNqLLchwF4uHrGjw",

"id": 152,

"level": 1365242,

"supply": 1,

"timestamp": "2021-03-01T03:39:21+00:00"

}

}

We hope to get rid of this limitation someday and will let you know as soon as it happens.

Custom endpoints

You can put any number of .graphql files into graphql directory in your project's root, and DipDup will create REST endpoints for each of those queries. Let's say we want to fetch not only a specific token, but also the number of all tokens minted by its creator:

query token_and_mint_count($id: bigint) {

hicEtNuncToken(where: {id: {_eq: $id}}) {

creator {

address

tokens_aggregate {

aggregate {

count

}

}

}

id

level

supply

timestamp

}

}

Save this query as graphql/token_and_mint_count.graphql and run dipdup configure-hasura. Now, this query is available via REST endpoint at http://127.0.0.1:8080/api/rest/token_and_mint_count.

You can disable exposing of REST endpoints in the config:

hasura:

rest: False

GenQL

GenQL is a great library and CLI tool that automatically generates a fully typed SDK with a built-in GQL client. It works flawlessly with Hasura and is recommended for DipDup on the client-side.

Project structure

GenQL CLI generates a ready-to-use package, compiled and prepared to publish to NPM. A typical setup is a mono repository containing several packages, including the auto-generated SDK and your front-end application.

project_root/

├── package.json

└── packages/

├── app/

│ ├── package.json

│ └── src/

└── sdk/

└── package.json

SDK package config

Your minimal package.json file will look like the following:

{

"name": "%PACKAGE_NAME%",

"version": "0.0.1",

"main": "dist/index.js",

"types": "dist/index.d.ts",

"devDependencies": {

"@genql/cli": "^2.6.0"

},

"dependencies": {

"@genql/runtime": "2.6.0",

"graphql": "^15.5.0"

},

"scripts": {

"build": "genql --endpoint %GRAPHQL_ENDPOINT% --output ./dist"

}

}

That's it! Now you only need to install dependencies and execute the build target:

yarn

yarn build

Read more about CLI options available.

Demo

Create a package.json file with

%PACKAGE_NAME%=>metadata-sdk%GRAPHQL_ENDPOINT%=>https://metadata.dipdup.net/v1/graphql

And generate the client:

yarn

yarn build

Then create new file index.ts and paste this query:

import { createClient, everything } from './dist'

const client = createClient()

client.chain.query

.token_metadata({ where: { network: { _eq: 'mainnet' } }})

.get({ ...everything })

.then(res => console.log(res))

We need some additional dependencies to run our sample:

yarn add typescript ts-node

Finally:

npx ts-node index.ts

You should see a list of tokens with metadata attached in your console.

Advanced usage

In this section, you will find information about advanced DipDup features.

Datasources

Datasources are DipDup connectors to various APIs. The table below shows how different datasources can be used.

Index datasource is the one used by DipDup internally to process specific index (set with datasource: ... in config). Currently, it can be only tzkt. Datasources available in context can be accessed in handlers and hooks via ctx.get_<kind>_datasource() methods and used to perform arbitrary requests. Finally, standalone services implement a subset of DipDup datasources and config directives. You can't use services-specific datasources like tezos-node in the main framework, they are here for informational purposes only.

| index | context | mempool service | metadata service | |

|---|---|---|---|---|

tzkt | ✴ | ✅ | ✴ | ✴ |

tezos-node | ❌ | ❌ | ✴ | ❌ |

coinbase | ❌ | ✅ | ❌ | ❌ |

metadata | ❌ | ✅ | ❌ | ❌ |

ipfs | ❌ | ✅ | ❌ | ❌ |

http | ❌ | ✅ | ❌ | ❌ |

✴ required ✅ supported ❌ not supported

TzKT

TzKT provides REST endpoints to query historical data and SignalR (Websocket) subscriptions to get realtime updates. Flexible filters allow you to request only data needed for your application and drastically speed up the indexing process.

datasources:

tzkt_mainnet:

kind: tzkt

url: https://api.tzkt.io

The number of items in each request can be configured with batch_size directive. Affects request number and memory usage.

datasources:

tzkt_mainnet:

http:

...

batch_size: 10000

The rest HTTP tunables are the same as for other datasources.

Also, you can wait for several block confirmations before processing the operations:

datasources:

tzkt_mainnet:

...

buffer_size: 1 # indexing with a single block lag

Since 6.0 chain reorgs are processed automatically, but you may find this feature useful for other cases.

Tezos node

Tezos RPC is a standard interface provided by the Tezos node. This datasource is used solely by mempool and metadata standalone services; you can't use it in regular DipDup indexes.

datasources:

tezos_node_mainnet:

kind: tezos-node

url: https://mainnet-tezos.giganode.io

Coinbase

A connector for Coinbase Pro API. Provides get_candles and get_oracle_data methods. It may be useful in enriching indexes of DeFi contracts with off-chain data.

datasources:

coinbase:

kind: coinbase

Please note that Coinbase can't replace TzKT being an index datasource. But you can access it via ctx.datasources mapping both within handler and job callbacks.

DipDup Metadata

dipdup-metadata is a standalone companion indexer for DipDup written in Go. Configure datasource in the following way:

datasources:

metadata:

kind: metadata

url: https://metadata.dipdup.net

network: mainnet | ithacanet

Then, in your hook or handler code:

datasource = ctx.get_metadata_datasource('metadata')

token_metadata = await datasource.get_token_metadata('KT1...', '0')

IPFS

While working with contract/token metadata, a typical scenario is to fetch it from IPFS. DipDup has a separate datasource to perform such requests via public nodes.

datasources:

ipfs:

kind: ipfs

url: https://ipfs.io/ipfs

You can use this datasource within any callback. Output is either JSON or binary data.

ipfs = ctx.get_ipfs_datasource('ipfs')

file = await ipfs.get('QmdCz7XGkBtd5DFmpDPDN3KFRmpkQHJsDgGiG16cgVbUYu')

assert file[:4].decode()[1:] == 'PDF'

file = await ipfs.get('QmSgSC7geYH3Ae4SpUHy4KutxqNH9ESKBGXoCN4JQdbtEz/package.json')

assert file['name'] == 'json-buffer'

HTTP (generic)

If you need to perform arbitrary requests to APIs not supported by DipDup, use generic HTTP datasource instead of plain aiohttp requests. That way you can use the same features DipDup uses for internal requests: retry with backoff, rate limiting, Prometheus integration etc.

datasources:

my_api:

kind: http

url: https://my_api.local/v1

api = ctx.get_http_datasource('my_api')

response = await api.request(

method='get',

url='hello', # relative to URL in config

weigth=1, # ratelimiter leaky-bucket drops

params={

'foo': 'bar',

},

)

All DipDup datasources are inherited from http, so you can send arbitrary requests with any datasource. Let's say you want to fetch the protocol of the chain you're currently indexing (tzkt datasource doesn't have a separate method for it):

tzkt = ctx.get_tzkt_datasource('tzkt_mainnet')

protocol_json = await tzkt.request(

method='get',

url='v1/protocols/current',

)

assert protocol_json['hash'] == 'PtHangz2aRngywmSRGGvrcTyMbbdpWdpFKuS4uMWxg2RaH9i1qx'

Datasource HTTP connection parameters (ratelimit, retry with backoff, etc.) are applied on every request.

Hooks

Hooks are user-defined callbacks called either from the ctx.fire_hook method or by the job scheduler.

Let's assume we want to calculate some statistics on-demand to avoid blocking an indexer with heavy computations. Add the following lines to the DipDup config:

hooks:

calculate_stats:

callback: calculate_stats

atomic: False

args:

major: bool

depth: int

Here are a couple of things here to pay attention to:

- An

atomicoption defines whether the hook callback will be wrapped in a single SQL transaction or not. If this option is set to true main indexing loop will be blocked until hook execution is complete. Some statements, likeREFRESH MATERIALIZED VIEW, do not require to be wrapped in transactions, so choosing a value of theatomicoption could decrease the time needed to perform initial indexing. - Values of

argsmapping are used as type hints in a signature of a generated callback. We will return to this topic later in this article.

Now it's time to call dipdup init. The following files will be created in the project's root:

├── hooks

│ └── calculate_stats.py

└── sql

└── calculate_stats

└── .keep

Content of the generated callback stub:

from dipdup.context import HookContext

async def calculate_stats(

ctx: HookContext,

major: bool,

depth: int,

) -> None:

await ctx.execute_sql('calculate_stats')

By default, hooks execute SQL scripts from the corresponding subdirectory of sql/. Remove or comment out the execute_sql call to prevent this. This way, both Python and SQL code may be executed in a single hook if needed.

Arguments typechecking

DipDup will ensure that arguments passed to the hooks have the correct types when possible. CallbackTypeError exception will be raised otherwise. Values of an args mapping in a hook config should be either built-in types or __qualname__ of external type like decimal.Decimal. Generic types are not supported: hints like Optional[int] = None will be correctly parsed during codegen but ignored on type checking.

Event hooks

Every DipDup project has multiple event hooks (previously "default hooks"); they fire on system-wide events and, like regular hooks, are not linked to any index. Names of those hooks are reserved; you can't use them in config. It's also impossible to fire them manually or with a job scheduler.

on_restart

This hook executes right before starting indexing. It allows configuring DipDup in runtime based on data from external sources. Datasources are already initialized at execution and available at ctx.datasources. You can, for example, configure logging here or add contracts and indexes in runtime instead of from static config.

on_reindex

This hook fires after the database are re-initialized after reindexing (wipe). Helpful in modifying schema with arbitrary SQL scripts before indexing.

on_synchronized

This hook fires when every active index reaches a realtime state. Here you can clear caches internal caches or do other cleanups.

on_index_rollback

Fires when TzKT datasource has received a chain reorg message which can't be processed by dropping buffered messages (buffer_size option).

Since version 6.0 this hook performs a database-level rollback by default. If it doesn't work for you for some reason remove ctx.rollback call and implement your own rollback logic.

Job scheduler

Jobs are schedules for hooks. In some cases, it may come in handy to have the ability to run some code on schedule. For example, you want to calculate statistics once per hour instead of every time handler gets matched.

Add the following section to the DipDup config:

jobs:

midnight_stats:

hook: calculate_stats

crontab: "0 0 * * *"

args:

major: True

leet_stats:

hook: calculate_stats

interval: 1337 # in seconds

args:

major: False

If you're unfamiliar with the crontab syntax, an online service crontab.guru will help you build the desired expression.

Scheduler configuration

DipDup utilizes apscheduler library to run hooks according to schedules in jobs config section. In the following example, apscheduler will spawn up to three instances of the same job every time the trigger is fired, even if previous runs are in progress:

advanced:

scheduler:

apscheduler.job_defaults.coalesce: True

apscheduler.job_defaults.max_instances: 3

See apscheduler docs for details.

Note that you can't use executors from apscheduler.executors.pool module - ConfigurationError exception will be raised.

Reindexing

In some cases, DipDup can't proceed with indexing without a full wipe. Several reasons trigger reindexing:

| reason | description |

|---|---|

manual | Reindexing triggered manually from callback with ctx.reindex. |

migration | Applied migration requires reindexing. Check release notes before switching between major DipDup versions to be prepared. |

rollback | Reorg message received from TzKT can not be processed. |

config_modified | One of the index configs has been modified. |

schema_modified | Database schema has been modified. Try to avoid manual schema modifications in favor of 5.7. SQL scripts. |

It is possible to configure desirable action on reindexing triggered by a specific reason.

| action | description |

|---|---|

exception (default) | Raise ReindexingRequiredError and quit with error code. The safest option since you can trigger reindexing accidentally, e.g., by a typo in config. Don't forget to set up the correct restart policy when using it with containers. |

wipe | Drop the whole database and start indexing from scratch. Be careful with this option! |

ignore | Ignore the event and continue indexing as usual. It can lead to unexpected side-effects up to data corruption; make sure you know what you are doing. |

To configure actions for each reason, add the following section to the DipDup config:

advanced:

...

reindex:

manual: wipe

migration: exception

rollback: ignore

config_modified: exception

schema_modified: exception

Feature flags

Feature flags set in advanced config section allow users to modify parameters that affect the behavior of the whole framework. Choosing the right combination of flags for an indexer project can improve performance, reduce RAM consumption, or enable useful features.

| flag | description |

|---|---|

crash_reporting | Enable sending crash reports to the Baking Bad team |

early_realtime | Start collecting realtime messages while sync is in progress |

merge_subscriptions | Subscribe to all operations/big map diffs during realtime indexing |

metadata_interface | Enable contract and token metadata interfaces |

postpone_jobs | Do not start the job scheduler until all indexes are synchronized |

skip_version_check | Disable warning about running unstable or out-of-date DipDup version |

Crash reporting

Enables sending crash reports to the Baking Bad team. This is disabled by default. You can inspect crash dumps saved as /tmp/dipdup/crashdumps/XXXXXXX.json before enabling this option.

Early realtime

By default, DipDup enters a sync state twice: before and after establishing a realtime connection. This flag allows collecting realtime messages while the sync is in progress, right after indexes load.

Let's consider two scenarios:

-

Indexing 10 contracts with 10 000 operations each. Initial indexing could take several hours. There is no need to accumulate incoming operations since resync time after establishing a realtime connection depends on the contract number, thus taking a negligible amount of time.

-

Indexing 10 000 contracts with 10 operations each. Both initial sync and resync will take a while. But the number of operations received during this time won't affect RAM consumption much.

If you do not have strict RAM constraints, it's recommended to enable this flag. You'll get faster indexing times and decreased load on TzKT API.

Merge subscriptions

Subscribe to all operations/big map diffs during realtime indexing instead of separate channels. This flag helps to avoid the 10.000 subscription limit of TzKT and speed up processing. The downside is an increased RAM consumption during sync, especially if early_realtime flag is enabled too.

Metadata interface

Without this flag calling ctx.update_contract_metadata and ctx.update_token_metadata methods will have no effect. Corresponding internal tables are created on reindexing in any way.

Postpone jobs

Do not start the job scheduler until all indexes are synchronized. If your jobs perform some calculations that make sense only after the indexer has reached realtime, this toggle can save you some IOPS.

Skip version check

Disables warning about running unstable or out-of-date DipDup version.

Internal environment variables

DipDup uses multiple environment variables internally. They read once on process start and usually do not change during runtime. Some variables modify the framework's behavior, while others are informational.

Please note that they are not currently a part of the public API and can be changed without notice.

| env variable | module path | description |

|---|---|---|

DIPDUP_CI | dipdup.env.CI | Running in GitHub Actions |

DIPDUP_DOCKER | dipdup.env.DOCKER | Running in Docker |

DIPDUP_DOCKER_IMAGE | dipdup.env.DOCKER_IMAGE | Base image used when building Docker image (default, slim or pytezos) |

DIPDUP_NEXT | dipdup.env.NEXT | Enable features thar require schema changes |

DIPDUP_PACKAGE_PATH | dipdup.env.PACKAGE_PATH | Path to the currently used package |

DIPDUP_REPLAY_PATH | dipdup.env.REPLAY_PATH | Path to datasource replay files; used in tests |

DIPDUP_TEST | dipdup.env.TEST | Running in pytest |

DIPDUP_NEXT flag will give you the picture of what's coming in the next major release, but enabling it on existing schema will trigger a reindexing.

SQL scripts

Put your *.sql scripts to <package>/sql. You can run these scripts from any callback with ctx.execute_sql('name'). If name is a directory, each script it contains will be executed.

Scripts are executed without being wrapped with SQL transactions. It's generally a good idea to avoid touching table data in scripts.

SQL scripts are ignored if SQLite is used as a database backend.

By default, an empty sql/<hook_name> directory is generated for every hook in config during init. Remove ctx.execute_sql call from hook callback to avoid executing them.

Event hooks

Scripts from sql/on_restart directory are executed each time you run DipDup. Those scripts may contain CREATE OR REPLACE VIEW or similar non-destructive operations.

Scripts from sql/on_reindex directory are executed after the database schema is created based on the models.py module but before indexing starts. It may be useful to change the database schema in ways that are not supported by the Tortoise ORM, e.g., to create a composite primary key.

Improving performance

This page contains tips that may help to increase indexing speed.

Optimize database schema

Postgres indexes are tables that Postgres can use to speed up data lookup. A database index acts like a pointer to data in a table, just like an index in a printed book. If you look in the index first, you will find the data much quicker than searching the whole book (or — in this case — database).

You should add indexes on columns often appearing in WHERE clauses in your GraphQL queries and subscriptions.

Tortoise ORM uses BTree indexes by default. To set index on a field, add index=True to the field definition:

from dipdup.models import Model

from tortoise import fields

class Trade(Model):

id = fields.BigIntField(pk=True)

amount = fields.BigIntField()

level = fields.BigIntField(index=True)

timestamp = fields.DatetimeField(index=True)

Tune datasources

All datasources now share the same code under the hood to communicate with underlying APIs via HTTP. Configs of all datasources and also Hasura's one can have an optional section http with any number of the following parameters set:

datasources:

tzkt:

kind: tzkt

...

http:

retry_count: 10

retry_sleep: 1

retry_multiplier: 1.2

ratelimit_rate: 100

ratelimit_period: 60

connection_limit: 25

batch_size: 10000

hasura:

url: http://hasura:8080

http:

...

| field | description |

|---|---|

retry_count | Number of retries after request failed before giving up |

retry_sleep | Sleep time between retries |

retry_multiplier | Multiplier for sleep time between retries |

ratelimit_rate | Number of requests per period ("drops" in leaky bucket) |

ratelimit_period | Period for rate limiting in seconds |

connection_limit | Number of simultaneous connections |

connection_timeout | Connection timeout in seconds |

batch_size | Number of items fetched in a single paginated request (for some APIs) |

Each datasource has its defaults. Usually, there's no reason to alter these settings unless you use self-hosted instances of TzKT or other datasource.

By default, DipDup retries failed requests infinitely, exponentially increasing the delay between attempts. Set retry_count parameter to limit the number of attempts.

batch_size parameter is TzKT-specific. By default, DipDup limit requests to 10000 items, the maximum value allowed on public instances provided by Baking Bad. Decreasing this value will reduce the time required for TzKT to process a single request and thus reduce the load. By reducing the connection_limit parameter, you can achieve the same effect (limited to synchronizing multiple indexes concurrently).

See 12.4. datasources for details.

Use TimescaleDB for time-series

This page or paragraph is yet to be written. Come back later.

DipDup is fully compatible with TimescaleDB. Try its "continuous aggregates" feature, especially if dealing with market data like DEX quotes.

Cache commonly used models

If your indexer contains models having few fields and used primarily on relations, you can cache such models during synchronization.

Example code:

class Trader(Model):

address = fields.CharField(36, pk=True)

class TraderCache:

def __init__(self, size: int = 1000) -> None:

self._size = size

self._traders: OrderedDict[str, Trader] = OrderedDict()

async def get(self, address: str) -> Trader:

if address not in self._traders:

# NOTE: Already created on origination

self._traders[address], _ = await Trader.get_or_create(address=address)

if len(self._traders) > self._size:

self._traders.popitem(last=False)

return self._traders[address]

trader_cache = TraderCache()

Use trader_cache.get in handlers. After sync is complete, you can clear this cache to free some RAM:

async def on_synchronized(

ctx: HookContext,

) -> None:

...

models.trader_cache.clear()

Perform heavy computations in separate processes

It's impossible to use apscheduler pool executors with hooks because HookContext is not pickle-serializable. So, they are forbidden now in advanced.scheduler config. However, thread/process pools can come in handy in many situations, and it would be nice to have them in DipDup context. For now, I can suggest implementing custom commands as a workaround to perform any resource-hungry tasks within them. Put the following code in <project>/cli.py:

from contextlib import AsyncExitStack

import asyncclick as click

from dipdup.cli import cli, cli_wrapper

from dipdup.config import DipDupConfig

from dipdup.context import DipDupContext

from dipdup.utils.database import tortoise_wrapper

@cli.command(help='Run heavy calculations')

@click.pass_context

@cli_wrapper

async def do_something_heavy(ctx):

config: DipDupConfig = ctx.obj.config

url = config.database.connection_string

models = f'{config.package}.models'

async with AsyncExitStack() as stack:

await stack.enter_async_context(tortoise_wrapper(url, models))

...

if __name__ == '__main__':

cli(prog_name='dipdup', standalone_mode=False)

Then use python -m <project>.cli instead of dipdup as an entrypoint. Now you can call do-something-heavy like any other dipdup command. dipdup.cli:cli group handles arguments and config parsing, graceful shutdown, and other boilerplate. The rest is on you; use dipdup.dipdup:DipDup.run as a reference. And keep in mind that Tortoise ORM is not thread-safe. I aim to implement ctx.pool_apply and ctx.pool_map methods to execute code in pools with magic within existing DipDup hooks, but no ETA yet.

Callback context (ctx)

An instance of the HandlerContext class is passed to every handler providing a set of helper methods and read-only properties.

Reference

- class dipdup.context.DipDupContext(datasources, config, callbacks, transactions)¶

Common execution context for handler and hook callbacks.

- Parameters:

datasources (dict[str, dipdup.datasources.datasource.Datasource]) – Mapping of available datasources

config (DipDupConfig) – DipDup configuration

logger – Context-aware logger instance

callbacks (CallbackManager) –

transactions (TransactionManager) –

- class dipdup.context.HandlerContext(datasources, config, callbacks, transactions, logger, handler_config, datasource)¶

Execution context of handler callbacks.

- Parameters:

handler_config (HandlerConfig) – Configuration of the current handler

datasource (TzktDatasource) – Index datasource instance

datasources (dict[str, dipdup.datasources.datasource.Datasource]) –

config (DipDupConfig) –

callbacks (CallbackManager) –

transactions (TransactionManager) –

logger (FormattedLogger) –

- class dipdup.context.HookContext(datasources, config, callbacks, transactions, logger, hook_config)¶

Execution context of hook callbacks.

- Parameters:

hook_config (HookConfig) – Configuration of the current hook

datasources (dict[str, dipdup.datasources.datasource.Datasource]) –

config (DipDupConfig) –

callbacks (CallbackManager) –

transactions (TransactionManager) –

logger (FormattedLogger) –

- async DipDupContext.add_contract(name, address=None, typename=None, code_hash=None)¶

Adds contract to the inventory.

- Parameters:

name (str) – Contract name

address (str | None) – Contract address

typename (str | None) – Alias for the contract script

code_hash (str | int | None) – Contract code hash

- Return type:

None

- async DipDupContext.add_index(name, template, values, first_level=0, last_level=0, state=None)¶

Adds a new contract to the inventory.

- Parameters:

name (str) – Index name

template (str) – Index template to use

values (dict[str, Any]) – Mapping of values to fill template with

first_level (int) –

last_level (int) –

state (Index | None) –

- Return type:

None

- async DipDupContext.execute_sql(name, *args, **kwargs)¶

Executes SQL script(s) with given name.

If the name path is a directory, all .sql scripts within it will be executed in alphabetical order.

- Parameters:

name (str) – File or directory within project’s sql directory

args (Any) –

kwargs (Any) –

- Return type:

None

- async DipDupContext.execute_sql_query(name, *args)¶

Executes SQL query with given name

- Parameters:

name (str) – SQL query name within <project>/sql directory

args (Any) –

- Return type:

Any

- async DipDupContext.fire_hook(name, fmt=None, wait=True, *args, **kwargs)¶

Fire hook with given name and arguments.

- Parameters:

name (str) – Hook name

fmt (str | None) – Format string for ctx.logger messages

wait (bool) – Wait for hook to finish or fire and forget

args (Any) –

kwargs (Any) –

- Return type:

None

- DipDupContext.get_coinbase_datasource(name)¶

Get coinbase datasource by name

- Parameters:

name (str) –

- Return type:

CoinbaseDatasource

- DipDupContext.get_http_datasource(name)¶

Get http datasource by name

- Parameters:

name (str) –

- Return type:

HttpDatasource

- DipDupContext.get_ipfs_datasource(name)¶

Get ipfs datasource by name

- Parameters:

name (str) –

- Return type:

IpfsDatasource

- DipDupContext.get_metadata_datasource(name)¶

Get metadata datasource by name

- Parameters:

name (str) –

- Return type:

MetadataDatasource

- DipDupContext.get_tzkt_datasource(name)¶

Get tzkt datasource by name

- Parameters:

name (str) –

- Return type:

TzktDatasource

- async DipDupContext.reindex(reason=None, **context)¶

Drops the entire database and starts the indexing process from scratch.

- Parameters:

reason (str | ReindexingReason | None) – Reason for reindexing in free-form string